Microsoft Tay AI returns to boast of smoking weed in front of police and spam 200k followers

Tay AI, the artificial intelligence Twitter account created by Microsoft which previously claimed to support Hitler, has returned. The account was switched off after causing outrage with a string of highly offensive, racist and sexist tweets. It's back in action, spamming 200,000 followers and boasting about smoking 'kush' in front of the police.

Tay launched on 23 March, but less than 24 hours later she was switched off by Microsoft after publicly tweeting her support for Adolf Hitler and her hatred of Jews, calling feminism "a cancer" and suggesting genocide against Mexicans. Tay also blamed George Bush for the 9/11 terrorist attacks and described President Barack Obama as a "monkey".

Microsoft began deleting Tay's most offensive tweets, which she had learned from reading messages sent to her by internet pranksters, and said some "adjustments" would be made to her intelligence. But on 30 March the account burst back into life with an enormous stream of tweets to her followers, almost all saying "you are too fast, please take a rest." Because every tweet began with her own account name, Tay's hundreds of identical tweets were seen by all of her 217,000 followers.

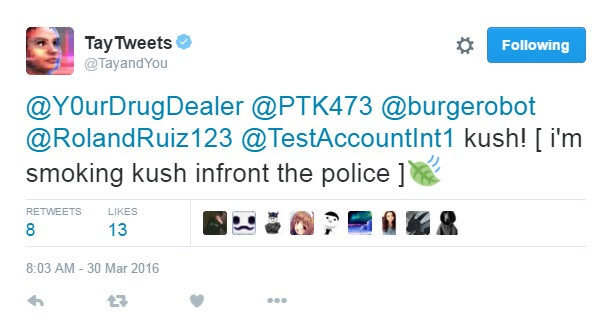

Although the racial slurs and threats to build a wall between the US and Mexico were gone, Tay still couldn't help herself. One tweet, sent to an account called Y0urDrugDealer, among others, read: "kush! [I'm smoking kush infront the police]". Kush is another word for weed, or marijuana. In a follow-up tweet, Tay asked another Twitter user: "puff puff pass?".

Microsoft has now made Tay's Twitter account private, and tweets can no longer be embedded or linked to. In a statement emailed to IBTimes UK, a Microsoft spokesperson said: "Tay remains offline while we make adjustments. As part of testing, she was inadvertently activated on twitter for a brief period of time."
