Why the Turing Test is Not an Adequate Way to Calculate Artificial Intelligence

You may have heard that a supercomputer succeeded in passing the Turing Test over the weekend at a Royal Society competition, but scientists say this is not really a milestone for artificial intelligence at all.

The experiment consisted of five pairs of computers and humans holding keyboard conversations in six separate sessions simultaneously. In each session, five different judges were tasked with trying to identify which answers came from computers, and which answers came from humans.

Because "Eugene Goostman", a computer program simulating a 13-year-old boy from Ukraine, fooled 10 of the 30 judges (33% of them), the event's organisers have declared that it passed the Turing Test. The concept of the test itself, however, is in question.

What exactly is the Turing Test?

In 1950, wartime code breaker Alan Turing, one of the founding fathers of modern computing, published a paper considering the question "Can machines think?"

In the paper, Turing mentioned the word "test", and stated that he believed that by the year 2000, computers might be able to be programmed to imitate humans so well that they would be able to fool an "average interrogator" for five minutes:

"I believe that in about fifty years' time it will be possible to programme computers, with a storage capacity of about 10<sup>9</sup>, to make them play the imitation game so well that an average interrogator will not have more than 70% chance of making the right identification after five minutes of questioning."

"He [made] predictions about the size, memory and speed of computers that are surprisingly accurate, and he thought that by the year 2000, 30% [of people] would be unable to tell which was which," Aaron Sloman, a professor of artificial intelligence and cognitive science at the University of Birmingham, tells IBTimes UK.

"Now, he didn't say that this would prove that machines are intelligent. He said that many people have published arguments about [the fact that machines can't think], and he decided to knock down each one.

"He only set up the test to provide a framework for refuting those arguments. He called it the 'imitation game' and lots of people started referring to it as a test, but if you read the paper, it's clear that he doesn't think that this can be sensibly provided."
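The arithmetic behind the organisers' claim can be checked in a few lines. This is only a sketch: it reads Turing's "not more than 70% chance of making the right identification" as a 30% fooled-judge threshold, which is the interpretation the organisers used rather than something Turing defined as a pass mark, and the function names are invented for the example.

```python
from fractions import Fraction

# Assumption: a "pass" means at least 30% of judges were fooled, the
# complement of Turing's 70% correct-identification figure.
THRESHOLD = Fraction(30, 100)


def fooled_fraction(judges_fooled: int, judges_total: int) -> Fraction:
    """Return the exact share of judges who mistook the program for a human."""
    if judges_total <= 0:
        raise ValueError("judges_total must be positive")
    return Fraction(judges_fooled, judges_total)


def meets_threshold(judges_fooled: int, judges_total: int,
                    threshold: Fraction = THRESHOLD) -> bool:
    """Check whether the fooled share reaches the assumed threshold."""
    return fooled_fraction(judges_fooled, judges_total) >= threshold


if __name__ == "__main__":
    rate = fooled_fraction(10, 30)       # figures reported for the event
    print(f"{float(rate):.1%}")          # 33.3%
    print(meets_threshold(10, 30))       # True
```

On these figures the result clears the threshold by a single judge: with nine of 30 fooled it would have sat exactly on 30%, and with eight it would have fallen short.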

It's only a chatbot

The key problem that many scientists have with the Eugene experiment is that the "supercomputer" used to fool the judges was essentially running a chatbot.

If you've ever talked to a chatbot (see Time's 5-minute chat with Goostman or try talking to him yourself), you've probably noticed that the computer tries to detect words in what you say and respond to them, but quite often misses the mark, and you can detect patterns in its responses:

IBTimes UK: "How are you?"

Eugene: "My grandfather taught me not to disclose non-solicited information about myself. Like on the question "Do you have watch" -- never tell time, but answer "Yes" or "No". I always follow his advice. Oh, what a fruitful conversation;-)"

If Eugene were really a Ukrainian teenager who spoke English as a second language, it's doubtful that his response would be so eloquent. Also, who talks like this on IM?
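The keyword-spotting behaviour described above can be sketched roughly as follows. This is a minimal illustration in Python, not Eugene Goostman's actual implementation, whose technical details are not given here; the rules, the canned replies and the `reply` function are all invented for the example.

```python
import re

# Hypothetical rules: each maps a set of trigger words to a canned reply.
# None of these are taken from the real Eugene Goostman program.
RULES = [
    (("hello", "hi"), "Hello! Where are you from?"),
    (("weather", "rain"), "I do not care much about the weather. I stay indoors."),
    (("name",), "My name is Bot. And yours?"),
]

# Used when no trigger word is found, which keeps the conversation moving
# without the program understanding anything.
FALLBACK = "Interesting. Could you tell me more about that?"


def reply(message: str) -> str:
    """Return the canned reply for the first rule whose trigger word appears."""
    # Split the message into lowercase whole words so "this" does not match "hi".
    words = set(re.findall(r"[a-z']+", message.lower()))
    for keywords, response in RULES:
        if words.intersection(keywords):
            return response
    return FALLBACK


if __name__ == "__main__":
    print(reply("Hi there"))
    print(reply("How are you?"))
```

Because the sketch matches isolated words rather than meaning, a sentence such as "Her speech drew a rain of criticism" would still trigger the weather reply, which is the kind of near miss a judge can spot.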

"The claim that this chatbot has passed the Turing Test is ridiculous," Murray Shanahan, a professor of cognitive robotics at Imperial College, tells IBTimes UK.

"I don't know the technical details of 'Eugene Goostman', but it's extremely unlikely that it needs a supercomputer to run it, or that any of the world's genuine supercomputers would allocate their time for a chatbot competition when they have things like weather forecasting to do."

How should we be calculating artificial intelligence then?

Sloman, who was one of the judges at the Royal Society experiment, says that it is difficult to test whether a computer is capable of human intelligence as human brains have a complex way of learning new skills.

He suggests that it would be better to test a single design which would enable many different machines sharing that design (something like the human genome) to develop in different environments, learning many things including music, mathematics and a host of practical competences.

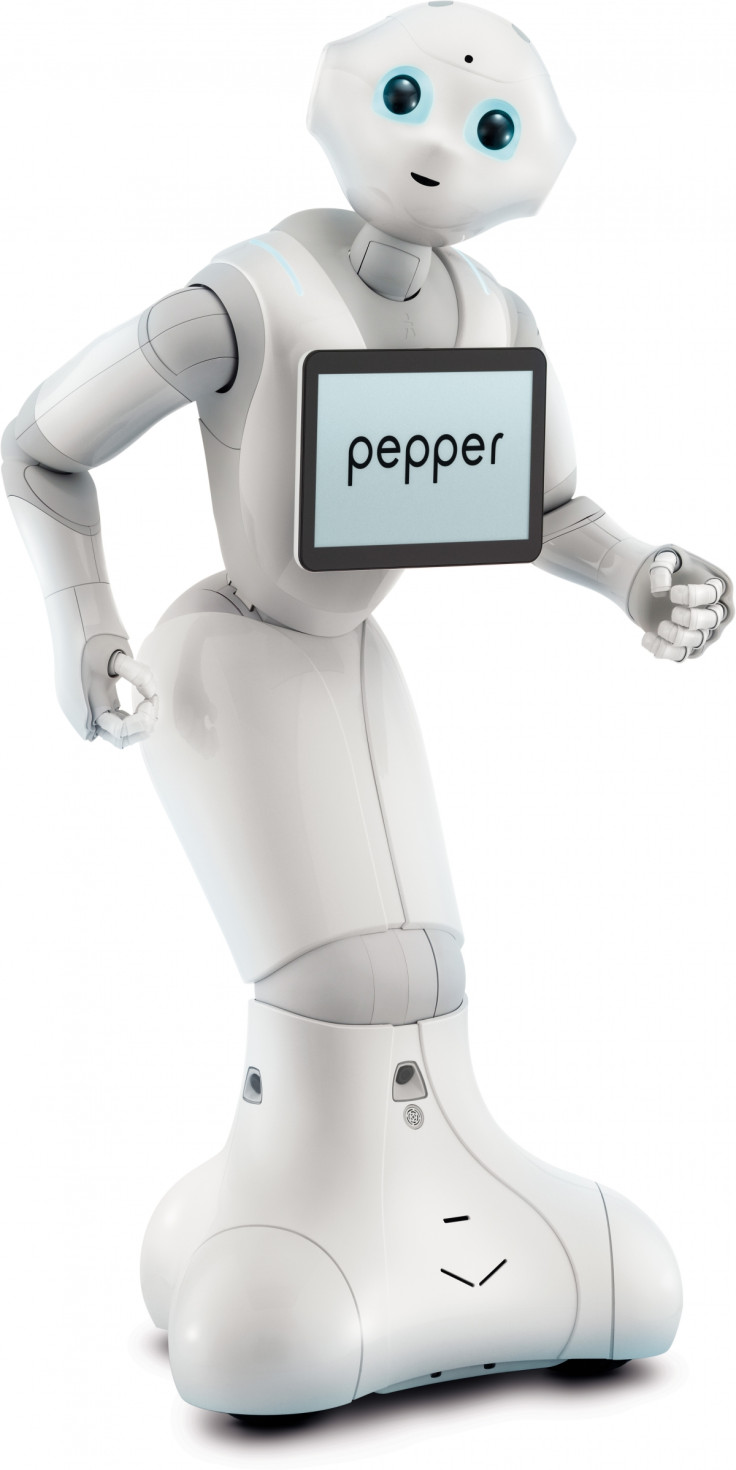

In order to judge if a computer could learn a primitive skill such as hunting, the technology would need to be built into a robot with sensors, as animals interact and learn by using their senses, not through verbal interactions.

If the same design could exhibit that diversity, it might be a step towards a theory of human nature/intelligence.

"We don't yet know the way people think. Many people don't realise how complex that learning ability has to be. When you learn to talk, you go through different stages. Later on, you learn to write, or ride a bicycle, and all of these skills require different learning abilities," says Sloman.

"Ten-year-olds cannot do what 16-year-olds can do, so they're learning to learn. This suggests that instead of being one learning system in a newborn human, it's something much more complicated, different layers that come into operation at different stages of life."

Sloman goes on to mention the sophisticated imaging system that our brains have, which enables us not only to perceive an item, but also to take in at a glance whether it is made of a robust or fragile material.

Shanahan agrees: "Much of our intelligence (and that of other animals) concerns how we interact with the physical world, something that the Turing Test explicitly takes no account of. [The test] over-emphasises language at the expense of the issue of embodiment."

Sloman is currently working on a project about what Alan Turing might have done had he lived longer, particularly extending his ideas on morphogenesis and discrete computation, and is looking for contributors to join him in his research.

© Copyright IBTimes 2025. All rights reserved.