After being exploited by Russian troll farms, Facebook takes new steps to fight 'fake news'

For the largest social network in the world, truth has become a major selling point.

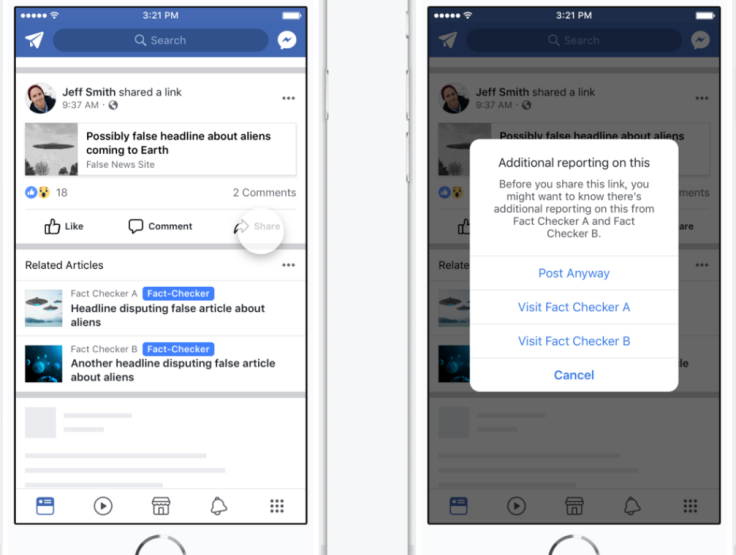

Facebook is changing how it identifies "fake news" for users, dropping its "disputed flags" in favour of "related articles" that add context to stories on the social network.

In a blog post published Wednesday (20 December), the California-headquartered tech giant revealed that it has also started a new initiative to better understand how its users "decide whether information is accurate or not based on the news sources they depend upon."

Facebook launched disputed flags – among other anti-misinformation features – in mid-December last year.

At the time, it partnered with external fact-checking organisations to review articles and used teams of staff to flag any content that may not be accurate.

Now, it believes the glaring icons may have been the wrong move. Some academic research suggests that strong visual cues, such as large red flags, can actually further entrench the beliefs they are meant to challenge.

The social network said that related articles, in contrast, are more effective at showing the broader picture.

"We've found that when we show related articles next to a false news story, it leads to fewer shares than when the disputed flag is shown," wrote Facebook product manager Tessa Lyons.

"By showing related articles rather than disputed flags we can help give people better context. And understanding how people decide what's false and what's not will be crucial to our success over time. Please keep giving us your feedback because we'll be redoubling our efforts in 2018."

Facebook, alongside Google and Twitter, has come under fire in recent months after being exploited by Russia during the 2016 US presidential election. It has admitted being used by "troll farms", which bought advertising on the platform to circulate targeted propaganda.

In a joint Medium post published Wednesday, three Facebook staff members (product designer Jeff Smith, user experience researcher Grace Jackson, and content strategist Seetha Raj) said they had learned from experience and that reducing false news is now a top priority.

"We learned that dispelling misinformation is challenging," they wrote. "Just because something is marked as 'false' or 'disputed' doesn't necessarily mean we will be able to change someone's opinion about its accuracy." Untruth now comes in many forms, they noted, from politics to memes.

Some of it, controversially, is spread by the most powerful man in the world.