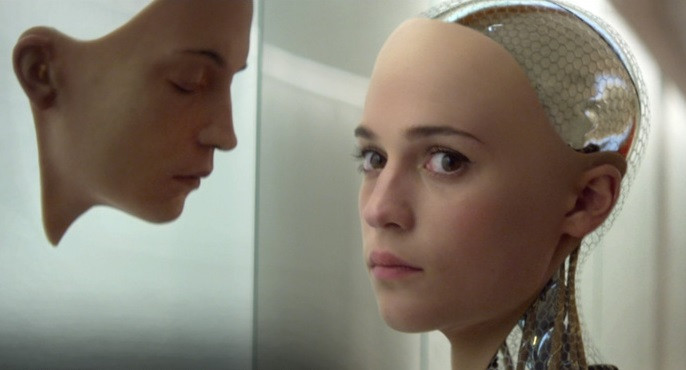

AI should be 'human-like' and capable of empathy to avoid existential threat to mankind

The idea that a computer could be conscious is an unsettling one. It doesn't seem natural for a man-made machine to be able to feel pain, to understand the depths of regret, or be capable of experiencing an ethereal sense of hope.

But some experts are now claiming that this is exactly the type of artificial intelligence (AI) we will need to develop if we are to quell the existential threat that this nascent technology poses.

Speaking in Cambridge on Friday (20 February), Murray Shanahan, professor of cognitive robotics at Imperial College London, said that in order to nullify this threat, any "human-level AI" - or artificial general intelligence (AGI) - should also be "human-like".

Shanahan suggested that if the forces driving us towards the development of human-level AI are unstoppable, then there are two options. Either a potentially dangerous AGI is developed, based on a ruthless optimisation process with no moral reasoning, or an AGI is created based on the psychological and perhaps even neurological blueprint of humans.

"Right now my vote is for option two, in the hope that it will lead to a form of harmonious co-existence (with humanity)," Shanahan said.

'The end of the human race'

Together with Stephen Hawking and Elon Musk, Shanahan helped advise the Centre for the Study of Existential Risk (CSER) on an open letter calling for more research into AI in order to avoid the "potential pitfalls" of AI development.

According to Musk, these pitfalls could be "more dangerous than nukes", while Hawking has suggested that they could lead to the end of humanity.

"The primitive forms of artificial intelligence we already have, have proved very useful," Hawking said in December 2014. "But I think the development of full artificial intelligence could spell the end of the human race."

How far away we are from developing an AGI capable of testing these fears is uncertain, with predictions ranging anywhere from 15 to 100 years from now. Shanahan set the year 2100 as a time when AGI would be "increasingly likely but still not certain".

Whether or not it's possible, the danger lies in which forces drive the development route of AGI.

Capitalist forces could create 'risky things'

It is feared that the current social, economic and political forces driving us towards human-level AI are leading to the development of the more dangerous first option of AGI, as hypothesised by Shanahan.

"Capitalist forces will drive incentive to produce ruthless maximisation processes. With this there is the temptation to develop risky things," Shanahan said, giving the example of companies or governments using AGI to subvert markets, rig elections or create new automated and potentially uncontrollable military technologies.

"Within the military sphere governments will build these things just in case the others do it, so it's a very difficult process to stop," he said.

Despite these dangers, Shanahan believes it would be premature to ban any form of AI research, as there is currently no evidence to suggest that we will actually reach this point. Instead it is a matter of pointing the research in the right direction.

Mimicking the mind to create homeostasis

An AGI focussed solely on optimisation will not necessarily be intentionally malicious towards humans. However, the fact that it may have convergent instrumental goals, such as self-preservation or resource acquisition, is enough to pose a significant risk.

As AI theorist Eliezer Yudkowsky pointed out in a 2008 paper on artificial intelligence, "the AI neither hates you nor loves you, but you are made out of atoms that it can use for something else."

By creating some form of homeostasis within the AGI, Shanahan believes that the potential of AI can be realised without destroying civilisation as we know it. For an AGI to understand the world in the same way as humans do, it would need certain prerequisites, including the capacity to recognise others, the ability to form relationships, the ability to communicate, and empathy.

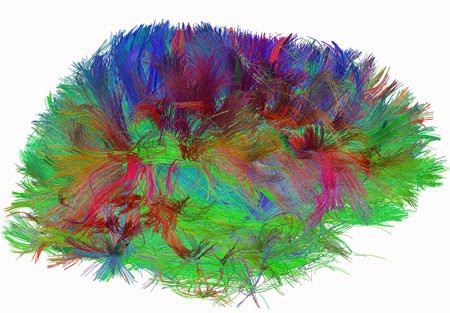

One way of creating a human-like machine is to mimic the human brain in its design. As Shanahan pointed out, "we know the human brain can achieve this". Scientists are, however, still some way from even mapping the brain, let alone replicating it.

The Human Connectome Project (HCP) is currently working towards reverse engineering the brain and aims to be finished by Q3 2015, though analysis of the data gathered will continue well beyond 2015.

"Our project could have long range implications for the development of artificial intelligence in that our project is part of the many efforts to understand how the brain is structurally organized and how the different regions of the brain work together functionally in different situations and tasks," Jennifer Elam of the HCP told IBTimes UK.

"There hasn't been a lot of connection between the brain mapping and AI fields to date, mainly because the two fields have been approaching the problem of understanding the brain from different angles and at different levels.

"As the HCP data analysis continues and general features emerge in publications, it is likely that some brain modellers will incorporate its findings to the extent possible into their computational structure and algorithms."

Whether this project proves useful to AI researchers remains to be seen, but together with other initiatives, like the Human Brain Project, it could provide an important basis for developing a human-like machine.

For now Shanahan claims that we should at least be aware of the danger that AI development poses, while not paying too much heed to Hollywood films or scaremongering media reports that tend to only obfuscate the issue.

"We need to be thinking about these risks and devote some resources to be thinking about this issue," Shanahan said. "Hopefully we've got many decades to go through these possibilities."

© Copyright IBTimes 2025. All rights reserved.