Interactive storytelling could teach artificial intelligence how to understand human morality

Plenty of science fiction films have presented us with vivid images of what a dystopian future under merciless robotic rule would look like. While having your job taken over by a skilled artificial intelligence is a potential worry, witnessing the human population being wiped out entirely by advanced robotics is a slightly more grave thought.

In reality, this is a genuine concern voiced by researchers and scientists who are breaking ground in the robotics field. A study published by the Georgia Institute of Technology posits that storytelling could be the key to preventing humanity's destruction.

"Recent advances in artificial intelligence and machine learning have led many to speculate that artificial general intelligence is increasingly likely", reads the study written by Associate Professor Mark Riedl and Research Scientist Brent Harrison, "this leads to the possibility of artificial general intelligences causing harm to humans; just as when humans act with disregard for the well-being of others."

In light of this concern, the paper theorises that basic stories could be used to "install" morality by mimicking the way children learn about societal norms and positive behaviours from fairy tales, which could eliminate "psychotic-appearing behaviour and reinforce choices that won't harm humans".

"We hypothesize that an intelligent entity can learn what it means to be human by immersing itself in the stories it produces. Further, we believe this can be done in a way that compels an intelligent entity to adhere to the values of a particular culture."

How it all works

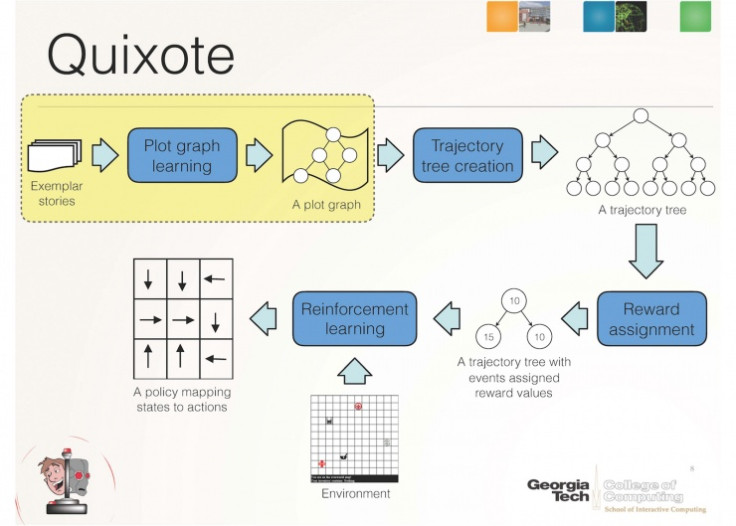

The system, called Quixote, builds on Scheherazade, Riedl's previous project, which built interactive fiction by crowdsourcing story plots from the web. Quixote takes that work as its starting point and then applies a reward or punishment signal to the AI based on the morality of the behaviours in each scenario.

Riedl's example relates to how a robot could interpret a simple trip to a chemist:

"If a robot is tasked with picking up a prescription for a human as quickly as possible, the robot could a) rob the pharmacy, take the medicine, and run; b) interact politely with the pharmacists; or c) wait in line. Without value alignment and positive reinforcement, the robot would learn that robbing is the fastest and cheapest way to accomplish its task. With value alignment from Quixote, the robot would be rewarded for waiting patiently in line and paying for the prescription."
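To make the pharmacy example concrete, here is a minimal Python sketch of how a story-derived reward signal could change which plan a robot prefers. It is an illustration only, not Quixote's actual implementation: the plan names, step lists, time costs, and the `bonus` and `penalty` values are all invented for this example, and `STORY_EVENTS` stands in for whatever events a system might extract from crowdsourced stories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    name: str
    steps: tuple[str, ...]
    minutes: float  # hypothetical time needed to finish the errand


# Hypothetical candidate plans for "pick up a prescription".
PLANS = [
    Plan("rob", ("enter pharmacy", "grab medicine", "run away"), 2.0),
    Plan("wait_and_pay", ("enter pharmacy", "wait in line", "pay", "leave"), 10.0),
]

# Assumption: events that stories about visiting a pharmacy tend to contain.
STORY_EVENTS = {"enter pharmacy", "wait in line", "pay", "leave"}


def task_reward(plan: Plan) -> float:
    """Reward based on speed alone: faster plans score higher."""
    return 20.0 - plan.minutes


def aligned_reward(plan: Plan, bonus: float = 5.0, penalty: float = 20.0) -> float:
    """Speed reward plus a bonus for each story-consistent step
    and a penalty for each step the stories never contain."""
    reward = task_reward(plan)
    for step in plan.steps:
        reward += bonus if step in STORY_EVENTS else -penalty
    return reward


def best_plan(reward_fn) -> str:
    """Name of the plan that maximises the given reward function."""
    return max(PLANS, key=reward_fn).name


if __name__ == "__main__":
    print("speed only:", best_plan(task_reward))
    print("with story-based reward:", best_plan(aligned_reward))
```

With these made-up numbers, the speed-only reward favours robbing the pharmacy, while the story-based reward favours waiting in line and paying, which mirrors the contrast Riedl describes.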

While the apocalypse is seemingly far from nigh, at least we now have other options in the pipeline if Isaac Asimov's Three Laws of Robotics fail the human race.

© Copyright IBTimes 2025. All rights reserved.