Scientists create telepathic AI that can decode brain waves, read and predict your movements

The AI could reportedly be used to improve communication possibilities in severely paralysed people.

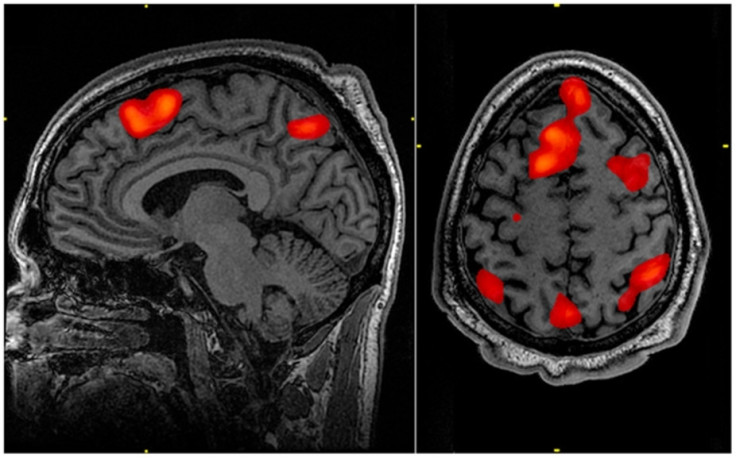

A team from the University of Freiburg in Germany has created a self-learning AI that can decode and read human brain wave signals measured by an electroencephalogram (EEG).

A machine-to-human interface like this can be useful in several situations, such as improving communication for severely paralysed people. The technology can also be used for early detection of epileptic seizures, says a report by ScienceDaily.

Using a self-learning algorithm, the AI was reportedly able to decode not only brain signals related to performed movements but also signals for movements that subjects merely imagined. It could also read imagined actions, such as mentally rotating an object, without the person actually carrying them out.

This AI does not work based on "predetermined brain signal characteristics", reports ScienceDaily, but adapts and works just as quickly as a system that makes use of such presets. A system or software that has such preset characteristics, according to the report, is not appropriate for every situation or person using it.

"The great thing about the program is we needn't predetermine any characteristics," said computer scientist Robin Tibor Schirrmeister, part of the research team. "The information is processed layer for layer, that is in multiple steps with the help of a non-linear function," he added.
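To illustrate what "layer for layer" processing with a non-linear function can look like, here is a minimal sketch in Python. It is not the Freiburg team's model: the layer sizes, the random untrained weights, the choice of ELU as the non-linear function, and all function names (`elu`, `make_layer`, `dense_layer`, `forward`) are assumptions made purely for illustration, and the input is random numbers standing in for an EEG sample.

```python
import math
import random


def elu(x):
    """A common non-linear function (assumed here; the article does not name one)."""
    return x if x > 0 else math.exp(x) - 1.0


def make_layer(n_in, n_out, rng):
    """Create random, untrained weights and zero biases for one layer."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense_layer(inputs, weights, biases):
    """One processing step: a weighted sum per output unit, then the non-linearity."""
    return [elu(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(signal, layers):
    """Pass the signal through every layer in turn, layer for layer."""
    activations = signal
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


rng = random.Random(0)
layer_sizes = [8, 6, 4, 2]  # hypothetical sizes: 8 input values, 2 output scores
layers = [make_layer(n_in, n_out, rng)
          for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

signal = [rng.gauss(0.0, 1.0) for _ in range(layer_sizes[0])]  # stand-in for EEG data
output = forward(signal, layers)
print(output)
```

In a real system the weights would be learned from recorded brain signals rather than drawn at random, which is what lets the program work without predetermined signal characteristics.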

This system, according to Schirrmeister, learns to recognise and differentiate between certain human behavioural patterns in real time.

The model for this AI was reportedly based on the way nerve cells in the human brain work, where electric signals from synapses are directed toward the cell core and back again. Schirrmeister explained that the theoretical basis for such a system already existed, but that building the model has only now become feasible, owing to the processing power and capabilities of current computers.

As the number of processing layers in a model increases, its precision and accuracy improve, according to the report. This system had 31 processing layers in total, an approach the researchers refer to as "Deep Learning".

"Our vision for the future includes self-learning algorithms that can reliably and quickly recognize the user's various intentions based on their brain signals," summarised head investigator Tonio Ball.

© Copyright IBTimes 2025. All rights reserved.