Tell Me Dave: Help Train a Robot to Follow Natural Language Commands By Talking to It

Although a One Poll survey in May found that some of the UK public fear robots will replace them in the workplace, that is unlikely to happen soon, because robots are still quite limited when it comes to understanding commands.

For example, if you were to ask a robot to "make a cup of coffee", it would not be able to complete the task unless you spelled out every single step of making coffee, such as turning on a tap, filling a kettle with water, boiling the water, pouring it into a cup and adding coffee beans, milk and sugar.

"While there is a lot of past research that went into the task of language parsing, they often require the instructions to be spelled out in full detail which makes it difficult to use them in real world situations," the team says on its website.

To solve this problem, a team of researchers headed by Ashutosh Saxena, assistant professor of computer science at Cornell University's Robot Learning Lab, are teaching robots how to understand natural language commands in English, as well as how to account for missing information and how to adapt to different environments.

Understanding natural language commands

In order to achieve this, the robot is equipped with a 3D camera and computer vision software that helps it identify objects in its environment.

It has been trained to associate objects with their capabilities: for example, that a pan can be poured into or poured from, or that a stove can heat objects placed on top of it.
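The idea of linking objects to their capabilities can be illustrated with a small lookup table. This is only a sketch for readers: the `AFFORDANCES` table, the action names and the `objects_that_can` helper are all hypothetical and are not taken from the Cornell team's software.

```python
# Hypothetical affordance table: object name -> actions it supports.
# The entries are illustrative, not the researchers' actual data.
AFFORDANCES = {
    "pan": {"pour_into", "pour_from", "place_on"},
    "stove": {"heat", "turn_on", "turn_off"},
    "kettle": {"fill", "pour_from", "turn_on"},
    "cup": {"pour_into"},
}


def objects_that_can(action, visible_objects):
    """Return the visible objects that support `action`, in the order seen."""
    return [
        obj for obj in visible_objects
        if action in AFFORDANCES.get(obj, set())
    ]


if __name__ == "__main__":
    # Objects the camera might report in one particular kitchen.
    seen = ["stove", "cup", "pan"]
    print(objects_that_can("pour_into", seen))
```

A table like this lets a planner ask "what in this room can I pour into?" rather than relying on a fixed object name.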

The team have designed an algorithm that accepts natural language commands from a user and the environment in which to execute them, then translates the commands into a detailed sequence of instructions that the robot can understand.
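To make the input and output of such a translation concrete, here is a minimal sketch that expands one command into primitive steps and adapts to what is in the room. It uses hand-written rules rather than the learned algorithm the researchers describe, and the `ground` function, the step tuples and the object names are assumptions made purely for illustration.

```python
def ground(command, environment):
    """Expand a high-level command into primitive steps for this environment.

    `environment` is a set of object names assumed to be present.
    This is a hand-coded illustration, not the team's learned model.
    """
    if "coffee" not in command.lower():
        raise ValueError(f"no template for command: {command!r}")

    # Pick a way to boil water that the environment actually allows.
    if "kettle" in environment:
        vessel = "kettle"
        heat = [("turn_on", "kettle")]
    elif "pan" in environment and "stove" in environment:
        vessel = "pan"
        heat = [("place_on", "pan", "stove"), ("turn_on", "stove")]
    else:
        raise ValueError("nothing available to boil water in")

    return (
        [("fill", vessel, "tap")]
        + heat
        + [("pour", vessel, "cup"), ("add", "coffee", "cup")]
    )


if __name__ == "__main__":
    for step in ground("Make a cup of coffee", {"pan", "stove", "cup", "tap"}):
        print(step)
```

The same command yields a different sequence in a kitchen with a kettle than in one with only a pan and a stove, which is the kind of adaptation the article describes.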

The instructions are compiled into a verb-environment-instruction-library (VEIL) and the robot is fed an animated video simulation of the action, accompanied by recorded voice commands from several speakers.

Associating actions with commands

The more users play with the robot and give it commands, the better it learns to complete tasks. Machine learning lets the robot's computer brain associate commands with flexibly defined actions and remember when it has performed an action before.
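One simple way to picture "remembering" past actions is a store of earlier demonstrations that is searched for the closest match to the current room. The sketch below is a nearest-match lookup, not the machine learning method the researchers use, and the `DemoLibrary` class and its methods are hypothetical names introduced for this example.

```python
from collections import defaultdict


class DemoLibrary:
    """Hypothetical store of past demonstrations, keyed by verb."""

    def __init__(self):
        self._by_verb = defaultdict(list)

    def record(self, verb, environment, steps):
        """Remember the steps a user showed for `verb` in `environment`."""
        self._by_verb[verb].append((frozenset(environment), list(steps)))

    def recall(self, verb, environment):
        """Return the stored steps whose environment shares the most
        objects with the current one, or None if the verb is unseen."""
        candidates = self._by_verb.get(verb)
        if not candidates:
            return None
        env = set(environment)
        _, best_steps = max(candidates, key=lambda c: len(c[0] & env))
        return best_steps


if __name__ == "__main__":
    lib = DemoLibrary()
    lib.record("boil", {"kettle", "tap"}, ["fill kettle", "turn on kettle"])
    lib.record(
        "boil",
        {"pan", "stove", "tap"},
        ["fill pan", "put pan on stove", "turn on stove"],
    )
    print(lib.recall("boil", {"pan", "stove", "cup"}))
```

Each new demonstration adds another candidate, so the lookup has more to choose from as more people contribute.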

At the moment, the robot is able to perform actions correctly up to 64% of the time even if the commands are varied or the environment is changed, and the researchers are hoping to improve this by teaming up with you.

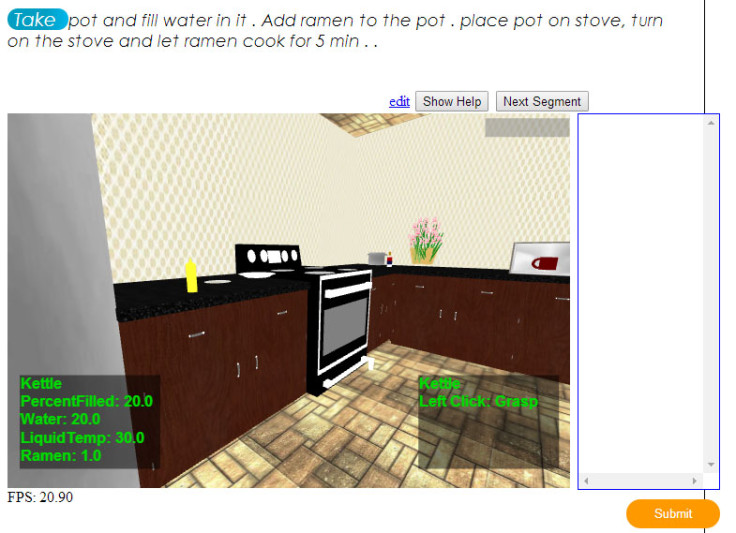

You can help teach a simulated robot how to perform tasks in the kitchen by getting an account on the Tell Me Dave website, entering instructions and trying to fulfil them in a simple 3D-animated environment.

The algorithm is detailed in the open-access paper entitled, "Tell Me Dave: Context-Sensitive Grounding of Natural Language to Manipulation Instructions".

© Copyright IBTimes 2025. All rights reserved.