Wikipedia using AI machine learning algorithms to get rid of prolific internet trolls

Researchers discovered that a small group of Wikipedia editors was responsible for almost 9% of all personal attacks on the site.

Computer scientists from the Wikimedia Foundation and Alphabet's Jigsaw (formerly the Google Ideas tech incubator) have demonstrated that artificial intelligence can be used to help moderate user comments left on Wikipedia and figure out which users are behind personal attacks, as well as why exactly they decide to engage in online abuse.

The researchers built machine learning algorithms and used them to analyse a huge trove of comments made by users in 2015 on the website. To give you a point of comparison, 63 million comments have been made on Wikipedia talk pages, which are used to discuss improvements to articles.

The algorithms were trained using a small batch of 100,000 toxic comments that had first been classified by 10 different human users to make it clear what type of personal attacks were happening in user comments. So, for example, the algorithm was able to differentiate between direct personal attacks, i.e. a comment like "You suck!", third-party personal attacks like "Bob sucks!" and indirect personal attacks like "Henry said Bob sucks".
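To make the labelling step concrete, here is a minimal sketch of how ten human judgments on one comment could be combined into a single training label. The article does not say how the researchers merged the judgments, so the majority-vote rule, the function name `aggregate_judgments` and the sample votes are all assumptions for illustration.

```python
def aggregate_judgments(judgments):
    """Combine per-annotator votes (True = personal attack) into one label.

    Returns the fraction of annotators who saw an attack and a majority-vote
    label. Majority voting is an assumption, not the researchers' stated rule.
    """
    if not judgments:
        raise ValueError("at least one judgment is required")
    attack_votes = sum(1 for vote in judgments if vote)
    fraction = attack_votes / len(judgments)
    return fraction, fraction > 0.5


# Hypothetical votes from ten annotators for two comments.
votes_by_comment = {
    "You suck!": [True] * 9 + [False],
    "Thanks for fixing the citation.": [False] * 10,
}

for comment, votes in votes_by_comment.items():
    fraction, is_attack = aggregate_judgments(votes)
    print(f"{comment!r}: attack fraction {fraction:.1f}, label {is_attack}")
```

Keeping the fraction alongside the yes/no label preserves how strongly the annotators agreed, which can matter for borderline comments.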

The results have been published in a paper entitled 'Ex Machina: Personal Attacks Seen at Scale', which will be presented at the 26th International World Wide Web Conference 2017 that will take place in Perth, Australia from 3-7 April 2017.

Only a few users made up 9% of all online abuse

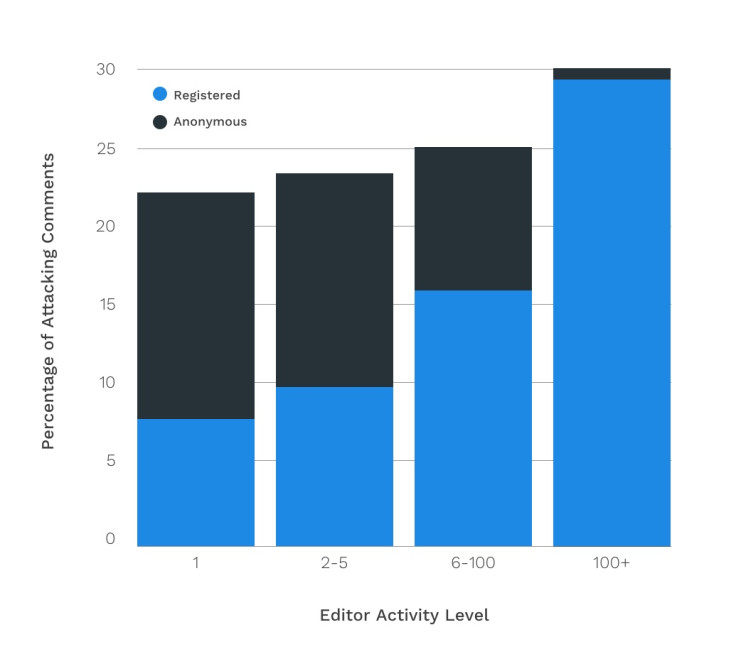

The analysis showed that anonymous users accounted for 43% of all the comments left on Wikipedia, and that many of these users did not comment very often, sometimes not more than once. Anonymous users were six times more likely to launch personal attacks than registered users. However, there are 20 times more registered users than anonymous users, which means that over half of the personal attacks were still being carried out by registered users.
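The "over half" conclusion follows from simple arithmetic, sketched below. The sketch assumes the 20-to-1 figure can be treated as the ratio of activity between the two groups and that the six-fold figure is the ratio of attack rates. Both readings, and the function name `registered_attack_share`, are assumptions made for illustration rather than the researchers' own calculation.

```python
def registered_attack_share(volume_ratio, attack_rate_ratio):
    """Estimate the share of attacks coming from registered users.

    volume_ratio: registered activity per unit of anonymous activity
                  (20, under the assumption described above).
    attack_rate_ratio: how many times more likely an anonymous contribution
                       is to be an attack (6 in the article).
    """
    registered_attacks = volume_ratio * 1.0       # baseline attack rate
    anonymous_attacks = 1.0 * attack_rate_ratio   # higher rate, lower volume
    return registered_attacks / (registered_attacks + anonymous_attacks)


share = registered_attack_share(volume_ratio=20, attack_rate_ratio=6)
print(f"Registered users' share of attacks: {share:.0%}")
```

Under these assumptions registered users account for 20 out of every 26 attacks, roughly three quarters, so the higher attack rate among anonymous users is outweighed by the sheer volume of registered activity.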

The researchers noted that most of the time, users were fine: 80% of personal attacks came from over 9,000 users who each made fewer than five attacking comments, suggesting that most people only engaged in online abuse when they got annoyed.

However, there was a small group of 34 active users who the researchers rated as having a "toxicity level of more than 20", and these users were responsible for almost 9% of all the personal attacks on the site.
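The split between occasional offenders and prolific ones can be sketched as a simple count of attacking comments per user. This sketch assumes that "toxicity level" means the number of attacking comments a user has made, which the article does not spell out. The function name `attack_share_by_group`, the thresholds as parameters and the toy data are all hypothetical.

```python
from collections import Counter


def attack_share_by_group(attack_authors, heavy_threshold=20, occasional_limit=5):
    """Group users by how many attacking comments they made.

    attack_authors: one entry per attacking comment, naming its author.
    Users with more than heavy_threshold attacks count as heavy offenders;
    users with fewer than occasional_limit attacks count as occasional.
    """
    counts = Counter(attack_authors)
    total = sum(counts.values())
    if total == 0:
        return {"heavy_users": [], "heavy_share": 0.0, "occasional_share": 0.0}
    heavy = {user: n for user, n in counts.items() if n > heavy_threshold}
    occasional = {user: n for user, n in counts.items() if n < occasional_limit}
    return {
        "heavy_users": sorted(heavy),
        "heavy_share": sum(heavy.values()) / total,
        "occasional_share": sum(occasional.values()) / total,
    }


# Toy data: one prolific attacker and a few users who lashed out now and then.
attack_authors = ["troll"] * 25 + ["alice"] * 2 + ["bob"] * 3 + ["carol"] * 10
result = attack_share_by_group(attack_authors)
print(result["heavy_users"], result["heavy_share"], result["occasional_share"])
```

A moderator could review only the short list of heavy offenders instead of every user who has ever posted an angry comment.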

Clearly these users are trolls, people who go onto the internet to start fights and launch personal attacks for fun. The good news is that the algorithms have made it much easier for Wikipedia to identify these people and block them, getting rid of a chunk of the online abuse without needing to block every user who gets angry during a heated discussion or to manually trawl through comments looking for patterns of abuse.

Humans still needed to calm down fights that break out

Online fights can spread like wildfire, so sometimes human moderators would still be a better solution than letting computers figure out who to block from the Wikipedia talk pages.

"These results suggest the problems associated with personal attacks do not have an easy solution. However, our study also shows that less than a fifth of personal attacks currently trigger any action for violating Wikipedia's policy," the researchers conclude in the paper.

"Moreover, personal attacks cluster in time – perhaps because one personal attacks triggers another. If so, early intervention by a moderator could have a disproportionately beneficial impact."

But the future of comment moderation on websites might involve more artificial intelligence. The researchers point out that human moderators could greatly benefit from a computer system that automatically sorts comments, giving them a much clearer overview of how healthy Wikipedia conversations are and quickly flagging potentially provocative comments for review.
