Facebook using artificial intelligence to spot suicidal users and offer real-time prevention

New algorithm can identify users at risk and reach out with rapid support.

Facebook has developed artificial intelligence tools that identify warning signs of suicidal users and can trigger real-time prevention and support based solely on the language they use in posts.

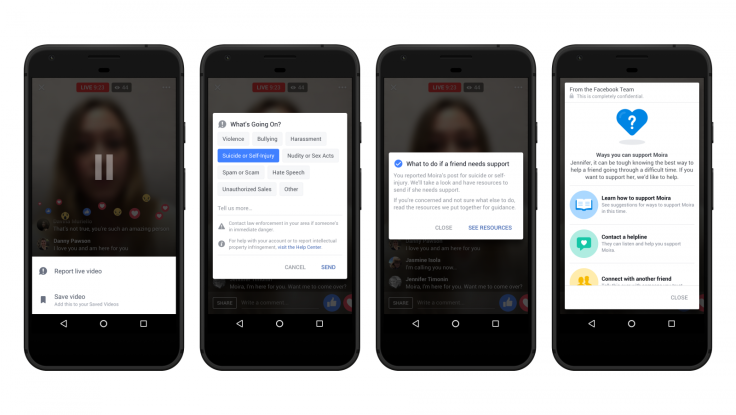

A recent rise in people broadcasting their suicides on Facebook Live has prompted algorithms that can pick out certain words and phrases in individual posts and in comments from friends. The pattern-recognition process could detect key phrases such as "I'm worried about you" and quickly send the posts to a human operations team, who review them and provide support.

The algorithms were trained on text from posts previously identified as coming from at-risk users. This allows them to flag similar content and streamline the reporting process.

The initiative comes just weeks after Facebook CEO Mark Zuckerberg released a 5,500-word manifesto on the company's future and how it can be used to help humanity. The suicide-watch AI program is part of a three-pronged approach to the issue, alongside a new feature that lets users report worrying Facebook Live behaviour to the social network and manual reporting tools introduced in 2016.

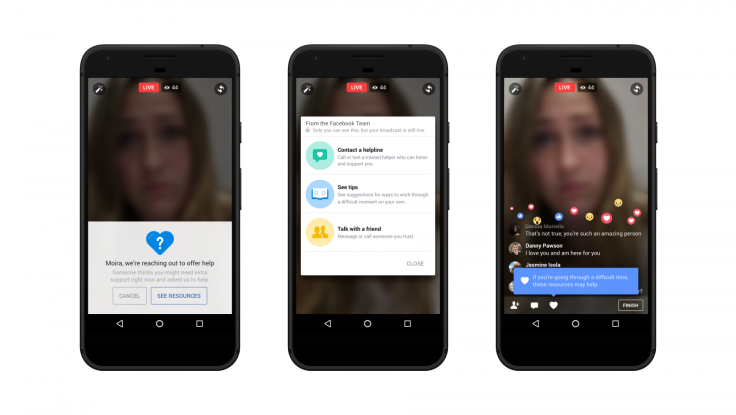

Those identified as being at risk will see contact details for support services on their screen, along with the option to talk to Facebook's staff on Facebook Messenger for more help. Other services also offer pre-populated text to make it easier for someone to start a conversation, but Facebook's use of AI for suicide prevention is believed to be the first of its kind.

Zuckerberg wrote in his manifesto: "There have been terribly tragic events — like suicides, some live streamed — that perhaps could have been prevented if someone had realised what was happening and reported them sooner." Since then Facebook has been working with suicide prevention experts to roll out these new tools.

"We have teams working around the world, 24/7, who review reports that come in and prioritise the most serious reports like suicide. We provide people who have expressed suicidal thoughts with a number of support options. For example, we prompt people to reach out to a friend and even offer pre-populated text to make it easier for people to start a conversation," read a Facebook blog post.

A number of suicides live streamed on Facebook have shocked users, including those of a British man and a 14-year-old girl from the US, who hanged themselves in separate incidents. Users have also witnessed overdoses and watched a man from LA shoot himself in his car.

"Addressing problems such as mental health, depression and suicide is an incredibly tough and sensitive issue and can be difficult for people to speak about and ask for help. It is positive, therefore, to see companies such as Facebook using AI on their platform to identify people in need," said Claire Stead, Online Safety Expert from Smoothwall.

"Online protection is of vital importance in this digital age and needs to be taken as seriously as protection 'offline'. But equally, it must be done in a way that does not hinder people's freedom. Smart, contextual monitoring is the only way to let people use the internet freely, whilst being protected. It needs to be a combined effort from organisations, schools and family members if we are to truly help people seek the support they need when getting through difficult times," Stead added.

The Samaritans provides a free support service for those who need to talk to someone in the UK and Republic of Ireland. It can be contacted via Samaritans.org or by calling 116 123 (UK and ROI), 24 hours a day, 365 days a year.

If you or someone you know is suffering from depression, please contact a free support service at Mind.org.uk or call 0300 123 3393. Call charges apply.

© Copyright IBTimes 2025. All rights reserved.