How hackers could steal your voice to access your bank account

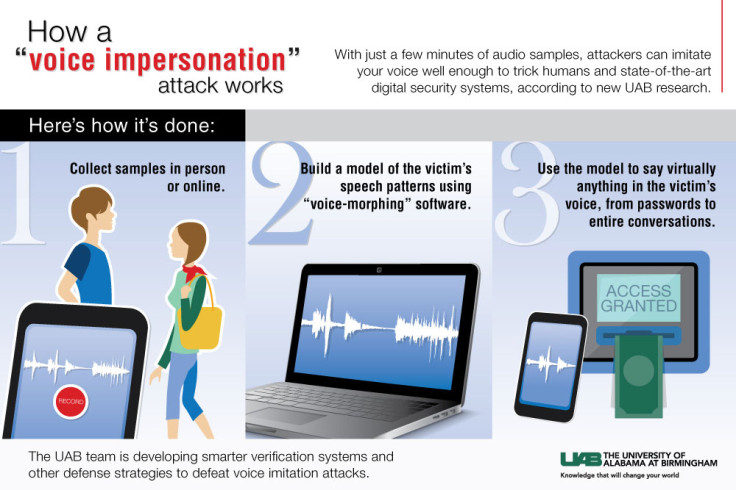

Hackers could be looking to steal your voice and use it for online theft, after researchers discovered that voice-based user authentication systems are vulnerable to voice impersonation attacks. Cyber criminals are constantly looking for new ways to gain access to personal data, and what you say in public or the videos you post online could give them everything they need to claim your identity.

Researchers at the University of Alabama at Birmingham (UAB) in the US found that they could penetrate both automated and human voice verification systems by capturing a person's speech and running it through a simple, off-the-shelf voice-morphing tool. The study highlights how such an attack could be used to access bank accounts, steal identities or even damage somebody's reputation. It also shows how much of our voice data we leave lying around without realising it.

"People often leave traces of their voices in many different scenarios. They may talk out loud while socialising in restaurants, giving public presentations or making phone calls, or leave voice samples online," said Nitesh Saxena, the director of the Security and Privacy In Emerging computing and networking Systems (SPIES) lab and associate professor of computer and information sciences at UAB.

Just a few minutes of voice samples could be enough to fool an automated or human system. Hackers could capture them by standing close to you with a recording device during a public conversation, by making a cold call, or by scanning videos you have posted to social media or YouTube.

The researchers fed the limited speech snippets they had collected into a voice-morphing tool, which transformed the attacker's voice into the victim's so the attacker could make the victim appear to say anything.

They first used the technique to trick automated voice-authentication systems, which use biometrics to verify a person's identity based on the unique characteristics of their speech. The attack achieved a success rate of around 80% to 90% against state-of-the-art systems of the kind typically used by banks and credit card companies. The report notes that some governments use voice authentication to control access to buildings, which could pose a national security threat if those systems can be fooled with voice-morphing technology.

Can a voice impersonation hack fool a human?

In another part of the research, they tested the morphed voices against human verification, where the voice is judged by a real person on the other end. To do this, they used celebrity voices, in this case Morgan Freeman and Oprah Winfrey, which they could make say anything they wanted.

Against human listeners the attack was less effective, but it still convinced the person on the other end around 50% of the time. Being able to fake somebody's voice, especially that of a high-profile person, opens up the threat of character assassination.

"For instance, the attacker could post the morphed voice samples on the internet, leave fake voice messages to the victim's contacts, potentially create fake audio evidence in the court and even impersonate the victim in real-time phone conversations with someone the victim knows," Saxena said. "The possibilities are endless."

Following reports that Chinese hackers were allegedly responsible for the theft of 5.6 million fingerprints, it is clear that biometric data is hot property because of the number of devices that rely on it. Combined with the findings of this research, this will no doubt concern individuals worried about their personal data, governments worried about national security, and corporations that may have invested hefty sums in automated authentication algorithms.

With voice-morphing technology bound to improve, the researchers say systems must be developed that can detect and resist such attacks by verifying that a live speaker is present. The UAB team is now researching defence strategies against this kind of attack.

© Copyright IBTimes 2025. All rights reserved.