IBM and Xerox predict we will soon control smartphone apps with just our brains

The concept of telepathy, or moving objects with one's mind, may still be beyond us, but there is no reason why we won't one day be able to navigate smartphone menus and apps with our brains, completely hands-free.

Researchers from IBM and Xerox have published a paper reviewing efforts to create software that can be controlled by the brain. They believe this will be the next big thing, thanks to increasingly powerful smartphones and the rise of electroencephalography (EEG) headsets.

EEG involves placing numerous small sensors on the scalp to record the brain's electrical activity. Patterns in that activity include the P300 signal, a positive voltage swing that appears roughly 300 milliseconds after a person notices a stimulus they are paying attention to. Traditionally, the technology has been used mainly in medicine, to diagnose and monitor seizures in epilepsy patients and to monitor brain function in intensive care patients.

However, EEG could also have a wide range of consumer applications. Since 2010, several firms, such as Australia's Emotiv and US-based NeuroSky, have been developing brain-computer interfaces (BCI), creating lightweight EEG headsets used for everything from helping coaches improve the mental state of US Olympic archers to controlling skateboards and playing video games with the mind.

Making calls and typing messages from the mind

But computer scientists are also using the technology to build mind-controlled apps for smartphones, such as NeuroPhone, a phone dialler application created at Dartmouth College that works with the Emotiv EPOC headset and an iPhone to let users call their contacts using their minds.

The app flashes photos of the user's contacts in random order. When the person they want to call appears, the user triggers the call either by winking or simply by concentrating on that contact, which produces a P300 brain signal. Laboratory tests showed that the wink trigger was 92-95% accurate and worked well even in a noisy environment, but the P300-based trigger was less accurate.
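The P300 trigger relies on a well-known property of the signal: it appears roughly 300 ms after a stimulus the user is attending to, but it is small compared with background EEG noise, so systems commonly average the responses to repeated flashes before deciding. The Python sketch below illustrates that idea on synthetic data. The sampling rate, signal amplitudes, the 250-450 ms scoring window and the function names `simulate_epochs` and `detect_target` are all assumptions made for illustration; this is not NeuroPhone's actual classifier.

```python
import math
import random

FS = 128  # sampling rate in Hz (assumed for illustration)
N_SAMPLES = int(FS * 0.6)  # each epoch spans 0-600 ms after a flash
TIMES = [i / FS for i in range(N_SAMPLES)]  # seconds after flash onset


def simulate_epochs(n_contacts, n_flashes, target, noise_uv=5.0, seed=0):
    """Synthetic epochs indexed as epochs[contact][flash][sample] (microvolts).

    Every epoch is Gaussian noise; flashes of the contact the user is
    attending to also carry a P300-like positive bump peaking at 300 ms.
    """
    rng = random.Random(seed)
    bump = [5.0 * math.exp(-0.5 * ((t - 0.3) / 0.05) ** 2) for t in TIMES]
    epochs = []
    for contact in range(n_contacts):
        flashes = []
        for _ in range(n_flashes):
            signal = bump if contact == target else [0.0] * N_SAMPLES
            flashes.append([s + rng.gauss(0.0, noise_uv) for s in signal])
        epochs.append(flashes)
    return epochs


def detect_target(epochs, window=(0.25, 0.45)):
    """Return the contact whose flash-averaged response is largest in the window."""
    in_window = [i for i, t in enumerate(TIMES) if window[0] <= t <= window[1]]
    scores = []
    for flashes in epochs:
        # Averaging across repeated flashes lets random noise cancel out
        # while the time-locked P300 component survives.
        avg = [sum(f[i] for f in flashes) / len(flashes) for i in range(N_SAMPLES)]
        scores.append(sum(avg[i] for i in in_window) / len(in_window))
    return max(range(len(scores)), key=scores.__getitem__)


epochs = simulate_epochs(n_contacts=6, n_flashes=20, target=4)
print("detected contact:", detect_target(epochs))
```

Averaging is the key design choice here: a single flash rarely produces a P300 that stands out from the noise, which is one reason a P300 trigger tends to be slower and less reliable than a large, deliberate signal such as a wink.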

Zhejiang University in China has also tested a virtual typewriting app that lets users reply to SMS text messages using only their thoughts. Using a virtual keyboard with both English letters and Chinese characters, test subjects were able to type out messages with 80% accuracy by focusing on specific targets while a Neuroscan Quik-Cap system recorded their P300 brain signals.
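Many P300 typing systems, going back to the speller described by Farwell and Donchin in 1988, arrange characters in a grid and flash whole rows and columns; the character the user is focusing on sits at the intersection of the row and column that evoke the strongest P300 response. The article does not say whether Zhejiang's app used this layout, so treat the sketch below as a generic illustration. The 6x6 grid, the made-up scores and the `pick_character` name are hypothetical.

```python
# Hypothetical 6x6 speller grid: 26 letters plus the digits 0-9.
GRID = [
    "ABCDEF",
    "GHIJKL",
    "MNOPQR",
    "STUVWX",
    "YZ0123",
    "456789",
]


def pick_character(row_scores, col_scores, grid=GRID):
    """Return the character at the intersection of the best row and column.

    row_scores[r] and col_scores[c] stand in for classifier outputs (for
    example, averaged P300 amplitude) after flashing row r or column c.
    """
    if len(row_scores) != len(grid) or len(col_scores) != len(grid[0]):
        raise ValueError("need one score per row and per column")
    best_row = max(range(len(row_scores)), key=row_scores.__getitem__)
    best_col = max(range(len(col_scores)), key=col_scores.__getitem__)
    return grid[best_row][best_col]


# Made-up scores: row 2 ("MNOPQR") and column 3 respond most strongly.
rows = [0.4, 0.6, 3.1, 0.2, 0.5, 0.3]
cols = [0.1, 0.7, 0.4, 2.8, 0.6, 0.2]
print(pick_character(rows, cols))  # prints P
```

The appeal of the grid is efficiency: a 6x6 layout needs only 12 flashes per round (six rows plus six columns) rather than 36 flashes of individual characters.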

Mind-controlled exoskeletons for disabled patients

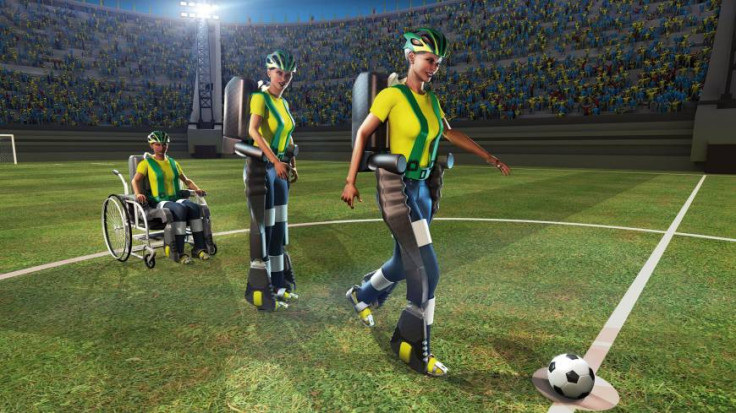

Researchers are also working on using P300 brain signals to control non-invasive prostheses, such as prosthetic limbs, as well as robotic exoskeleton suits that help paraplegics walk, like the one developed by Dr Miguel Nicolelis of Duke University and Dr Gordon Cheng of TU Munich and showcased at the opening ceremony of the 2014 World Cup in Brazil.

For now, lightweight EEG headsets have not quite taken off, but IBM and Xerox are confident it is only a matter of time before the technology is ready for widespread use.

"The use of BCI for mobile holds promise, as it would be useful for developing both assistive and general entertainment technology for the evolving mobile user," the researchers wrote.

"Developments in the fields of computational neurology, signal processing, machine learning coupled with the increasing availability of computational power on the mobile device along with cloud storage and processing paves the way for development of more BCI-based mobile applications for the ordinary user."

The paper, Brain Computer Interfaces For Mobile Apps: State-Of-The-Art And Future Directions, is an open-access paper published in the Cornell University Library repository.

© Copyright IBTimes 2025. All rights reserved.