MIT scientists develop camera that removes reflections so you can take photos through windows

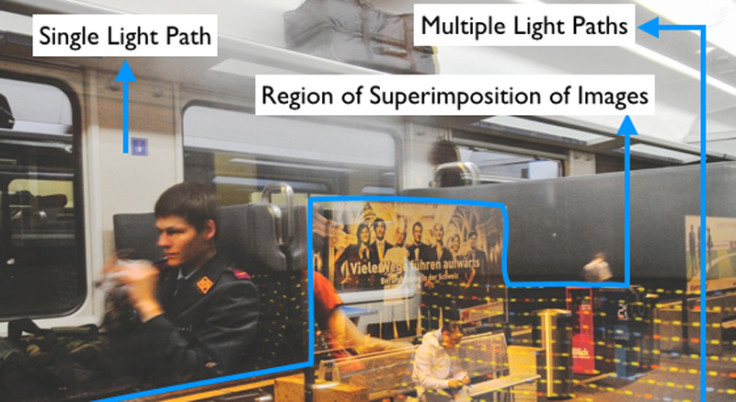

Computer scientists from MIT, in collaboration with Microsoft Research, have developed a camera system that removes reflections, so that clear photographs can be taken even through glass.

At the moment, no camera can detect and remove reflections on its own. Separating a reflection from the scene by timing the incoming light would require a device able to tell apart signals that travel at the speed of light and reach the sensor almost simultaneously. To get around this, researchers from the MIT Media Lab's Camera Culture Group turned to the Fourier transform and some clever signal processing.

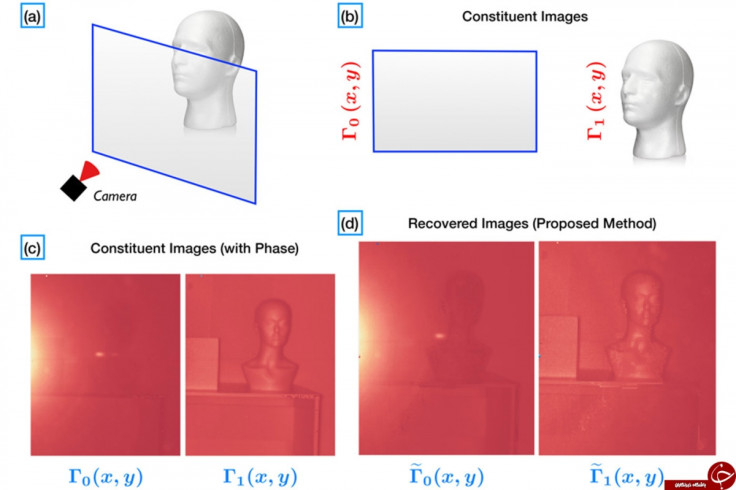

The Fourier transform is a mathematical tool that breaks any signal or function down into the waveforms of different frequencies that make it up, so that, plotted on a graph, it clearly shows which frequencies the signal contains. Because a delay in time shows up as a shift in phase at each frequency, two light signals that arrive at the camera's light sensor at slightly different times, for example one reflected off a glass window pane and one coming from fluorescent lighting overhead, will have Fourier decompositions with slightly different phases.
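
The link between arrival time and phase is the Fourier shift theorem: delaying a signal by a time τ multiplies each frequency component f by the phase factor exp(-2πifτ) and leaves its magnitude unchanged. The minimal Python/NumPy sketch below checks this numerically for the discrete Fourier transform. The sampled pulse and the three-sample delay are hypothetical illustration values, not data from the researchers' camera.

```python
import numpy as np

N = 64                       # samples in one period of the (periodic) DFT
t = np.arange(N)
pulse = np.exp(-0.5 * ((t - 10) / 2.0) ** 2)   # hypothetical light pulse

delay = 3                    # hypothetical arrival delay, in samples
delayed = np.roll(pulse, delay)   # delayed[n] == pulse[n - delay] (circularly)

X = np.fft.fft(pulse)
Y = np.fft.fft(delayed)

# Shift theorem: each frequency bin k picks up a phase of -2*pi*k*delay/N
k = np.arange(N)
predicted = X * np.exp(-2j * np.pi * k * delay / N)

print(np.allclose(Y, predicted))          # True: delay appears as phase
print(np.allclose(np.abs(Y), np.abs(X)))  # True: magnitudes are unchanged
```

The second check foreshadows the measurement problem: the magnitudes alone carry no trace of the delay.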

Figuring out how to measure phases from light signals

But conventional camera sensors cannot measure phase; they record only the intensity of the light that strikes them. The researchers therefore had to work out how to make targeted intensity measurements from which the phases of the different light signals could be reconstructed.
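
Why intensity alone falls short, yet a mixture of signals still carries timing information, can be sketched with a simplified model. This model is an assumption for illustration, not the paper's exact formulation: at modulation frequency f, a return with amplitude a and delay τ contributes the complex phasor a·exp(-2πifτ), and the sensor records only the squared magnitude of the sum. For a single return that intensity is a² whatever the delay. For two returns it becomes a1² + a2² + 2·a1·a2·cos(2πf(τ2 - τ1)), so the delay gap appears as an oscillation across frequencies. The function name `mixed_intensity` and all numbers are hypothetical.

```python
import numpy as np


def mixed_intensity(freqs, amps, delays):
    """Squared magnitude of the summed returns at each modulation frequency.

    Simplified model (an assumption for illustration): a return with
    amplitude a and delay tau contributes the phasor a * exp(-2j*pi*f*tau).
    """
    f = np.asarray(freqs, dtype=float)[:, None]          # shape (n_freqs, 1)
    phasors = np.asarray(amps) * np.exp(-2j * np.pi * f * np.asarray(delays))
    return np.abs(phasors.sum(axis=1)) ** 2               # sensor sees intensity only


freqs = np.linspace(10e6, 100e6, 10)  # hypothetical modulation frequencies (Hz)

# One return: the recorded intensity is identical for any delay
one_a = mixed_intensity(freqs, [1.0], [1e-9])
one_b = mixed_intensity(freqs, [1.0], [5e-9])
print(np.allclose(one_a, one_b))  # True

# Two returns (e.g. a glass reflection plus the scene): intensity now
# oscillates with frequency at a rate set by the delay gap t2 - t1
a1, a2, t1, t2 = 0.4, 1.0, 1e-9, 3e-9
two = mixed_intensity(freqs, [a1, a2], [t1, t2])
closed_form = a1**2 + a2**2 + 2 * a1 * a2 * np.cos(2 * np.pi * freqs * (t2 - t1))
print(np.allclose(two, closed_form))  # True
```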

By modifying an off-the-shelf Kinect camera sensor made for Microsoft's Xbox One gaming console, the researchers built a camera that deliberately emits light modulated at specific frequencies and then gauges the intensity of the light that reflects back. Algorithms developed by the researchers then combine the camera's measurements with information from several other reflectors positioned between the camera and the object being photographed.

From this combined information, the algorithms deduce the phase of the returning light and separate signals arriving from different depths. They do so using a technique from X-ray crystallography called "phase retrieval", work on which earned the 1985 Nobel Prize in Chemistry.

Camera can remove reflections in one minute

In theory, to photograph an object behind a glass pane, the camera should need to emit light at only two frequencies, but in practice it cannot emit pure frequencies. To filter out the resulting noise, the researchers have the system cycle through 45 different frequencies so that the reflection is fully separated from the image, which currently requires one minute of exposure time.
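
The benefit of sampling many frequencies can be illustrated with a toy estimator. This is not the researchers' phase-retrieval algorithm. It assumes the simplified two-return intensity model a1² + a2² + 2·a1·a2·cos(2πfΔ) and fits it to 45 noisy measurements: a grid search over the delay gap Δ, with linear least squares for the coefficients at each candidate. The helper `fit_two_returns`, the frequency range, the noise level and the amplitudes are all hypothetical.

```python
import numpy as np


def fit_two_returns(freqs, intensity, delay_grid):
    """Toy estimator (not the paper's algorithm) for two mixed returns.

    Assumes intensity(f) = c0 + c1 * cos(2*pi*f*delta), where
    c0 = a1**2 + a2**2 and c1 = 2*a1*a2. For each candidate delta the
    coefficients come from linear least squares; the smallest residual wins.
    """
    best = None
    for delta in delay_grid:
        design = np.column_stack(
            [np.ones_like(freqs), np.cos(2 * np.pi * freqs * delta)]
        )
        coeffs, _, _, _ = np.linalg.lstsq(design, intensity, rcond=None)
        resid = np.sum((design @ coeffs - intensity) ** 2)
        if best is None or resid < best[0]:
            best = (resid, delta, coeffs)
    _, delta, (c0, c1) = best
    # (a1 + a2)**2 = c0 + c1 and (a1 - a2)**2 = c0 - c1
    s = np.sqrt(max(c0 + c1, 0.0))
    d = np.sqrt(max(c0 - c1, 0.0))
    return delta, (s + d) / 2, (s - d) / 2  # gap, larger and smaller amplitude


rng = np.random.default_rng(0)
freqs = np.linspace(10e6, 200e6, 45)            # 45 hypothetical frequencies (Hz)
a_glass, a_scene, gap_true = 0.4, 1.0, 5e-9     # hypothetical scene parameters

clean = a_glass**2 + a_scene**2 + 2 * a_glass * a_scene * np.cos(
    2 * np.pi * freqs * gap_true
)
noisy = clean + rng.normal(scale=0.01, size=freqs.size)  # imperfect source/sensor

grid = np.linspace(0.1e-9, 10e-9, 991)          # candidate gaps, 0.01 ns steps
gap_est, a_big, a_small = fit_two_returns(freqs, noisy, grid)
print(f"gap ~ {gap_est * 1e9:.2f} ns, amplitudes ~ {a_big:.2f} and {a_small:.2f}")
```

With many frequencies the noise averages out, and the fit lands close to the simulated 5 ns gap and amplitudes of 1.0 and 0.4. Note what the fit cannot say: which return arrived first, or which amplitude belongs to the glass. Intensity-only measurements leave ambiguities of this kind, and phase-retrieval methods have to resolve them with additional assumptions.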

"The interesting thing is that we have a camera that can sample in time, which was previously not used as machinery to separate imaging phenomena," said Ayush Bhandari, a PhD student in the MIT Media Lab and first author on the new paper.

The open-access paper, entitled "Phase retrieval approach to reflection cancelling cameras: Time-resolved image demixing", is published on MIT's website and was presented at the Institute of Electrical and Electronics Engineers (IEEE) International Conference on Acoustics, Speech and Signal Processing (ICASSP 2016) in Shanghai, China, on 25 March.

© Copyright IBTimes 2025. All rights reserved.