Stephen Hawking is really worried about artificial intelligence

A look back at what the physicist has said about artificial intelligence.

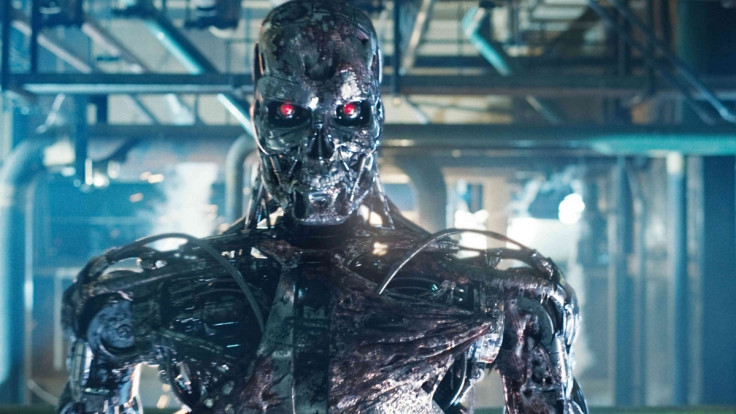

Stephen Hawking has warned that artificial intelligence could wipe out mankind if we are not careful. Speaking at the launch of the Leverhulme Centre for the Future of Intelligence in Cambridge, the physicist said AI has the potential to develop a will of its own that could place it in direct conflict with humans, Terminator-style.

However, he also said that, if we carry out enough research, AI could be used to eradicate disease and poverty. "I believe there is no deep difference between what can be achieved by a biological brain and what can be achieved by a computer. It therefore follows that computers can, in theory, emulate human intelligence – and exceed it," he said.

"Success in creating AI could be the biggest event in the history of our civilisation. But it could also be the last unless we learn how to avoid the risks.

"Alongside the benefits, AI will also bring dangers, like powerful autonomous weapons, or new ways for the few to oppress the many. It will bring great disruption to our economy. And in the future, AI could develop a will of its own – a will that is in conflict with ours.

"In short, the rise of powerful AI will be either the best, or the worst thing, ever to happen to humanity. We do not know which."

In his speech, he said the research being done at the Leverhulme Centre was imperative to the future of our species. "We spend a great deal of time studying history, which, let's face it, is mostly the history of stupidity," he said. "So it is a welcome change that people are studying instead the future of intelligence."

But this is not the first time Hawking has spoken out about the potential dangers of AI. Here are some of his previous comments on the subject.

January 2016

Ahead of his BBC Reith Lectures, Hawking said the biggest threats to humanity are ones we engineer ourselves: "We are not going to stop making progress, or reverse it, so we have to recognise the dangers and control them. I'm an optimist, and I believe we can."

October 2015

In a Reddit AMA, AI was a topic of focus for many users who wanted to know Hawking's thoughts on its future. Responding, he said: "The real risk with AI isn't malice but competence. A super-intelligent AI will be extremely good at accomplishing its goals, and if those goals aren't aligned with ours, we're in trouble.

"You're probably not an evil ant-hater who steps on ants out of malice, but if you're in charge of a hydroelectric green energy project and there's an anthill in the region to be flooded, too bad for the ants. Let's not place humanity in the position of those ants. Please encourage your students to think not only about how to create AI, but also about how to ensure its beneficial use."

October 2015

In an interview with Spanish newspaper El Pais, Hawking said computers could take over from humans in the next 100 years if we are not careful. "Computers will overtake humans with AI at some point within the next 100 years," he said. "When that happens, we need to make sure the computers have goals aligned with ours."

December 2014

Following an upgrade to his communication system in 2014, Hawking was asked about the developments in AI. He said he was concerned about the potential for computers to become self-aware and turn on us.

"It would take off on its own, and re-design itself at an ever increasing rate. Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded."

May 2014

Writing for the Independent, Hawking warned of an uncertain future regarding AI. "AI research is now progressing rapidly. Recent landmarks such as self-driving cars, a computer winning at Jeopardy! and the digital personal assistants Siri, Google Now and Cortana are merely symptoms of an IT arms race fuelled by unprecedented investments and building on an increasingly mature theoretical foundation. Such achievements will probably pale against what the coming decades will bring.

"One can imagine such technology outsmarting financial markets, out-inventing human researchers, out-manipulating human leaders, and developing weapons we cannot even understand. Whereas the short-term impact of AI depends on who controls it, the long-term impact depends on whether it can be controlled at all."

© Copyright IBTimes 2025. All rights reserved.