Twitter removed extremist content glorifying Nice attack within minutes

Counter Extremism Project says 50 Twitter accounts praising the attack used the hashtag 'Nice' in Arabic.

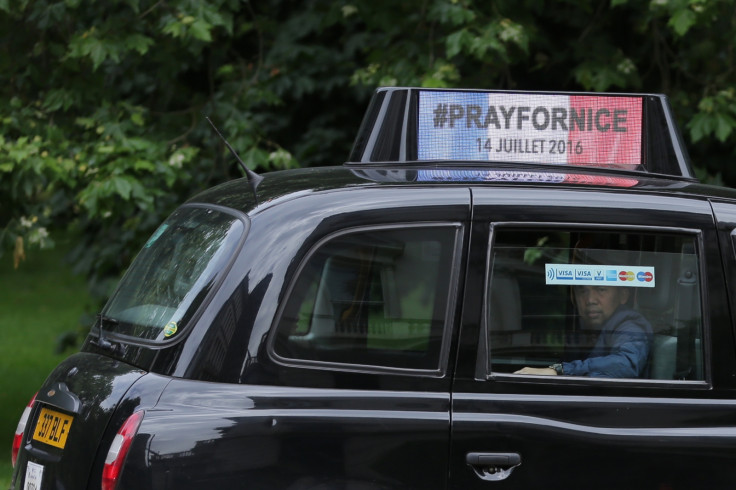

Twitter reacted quickly and removed all posts shared by Islamic State (Isis) extremists celebrating the truck attack in Nice, France. As many as 84 people were killed after a Tunisian man drove a truck through a crowd that had gathered to watch the Bastille Day fireworks display in Nice on 14 July.

The authorities were trying to find out whether the attacker, who has been named as Mohamed Lahouaiej Bouhlel, had any links with IS (Daesh).

According to the Counter Extremism Project, an organisation that reports extremist content online, 50 Twitter accounts that glorified the attack used the word Nice as a hashtag in Arabic. Most of the accounts appeared soon after the attack took place and shared images celebrating it.

"Twitter moved with swiftness we have not seen before to erase pro-attack tweets within minutes. It was the first time Twitter has reacted so efficiently," Counter Extremism Project said in a statement.

A similar stance was adopted by Twitter after the Paris and Brussels attacks. However, the company was fastest to respond this time around and removed all extremist content immediately, according to a Reuters report.

Twitter has been banning provocative or violent content from its site. Since mid-2015, the micro-blogging site has suspended more than 125,000 accounts for "threatening or promoting terrorist acts" related to Islamist groups. Additionally, it has increased the size of its team that reviews propaganda reports. Apart from monitoring accounts similar to those that have been reported, it has launched spam-fighting tools to uncover violating accounts for further review.

Although there was no official comment on whether any accounts were suspended, Twitter said that it "condemns terrorism and bans it". Besides Twitter, social media giant Facebook and other firms have also been working to remove posts that violate their terms of service.

Facebook has outlined a set of Community Standards explaining to its users the type of content they are allowed to share on its social network. The policies ban terrorism and content related to it, such as posts and images that promote violence or praise acts of terror.

"One of the most sensitive situations involves people sharing violent or graphic images of events taking place in the real world. In those situations, context and degree are everything," the Menlo Park-headquartered company said in a blog post.

"For instance, if a person witnessed a shooting, and used Facebook Live to raise awareness or find the shooter, we would allow it. However, if someone shared the same video to mock the victim or celebrate the shooting, we would remove the video."

Facebook relies on users and advocacy groups to report extremist content, which is then reviewed by its editorial team to decide whether to delete the post. A spokeswoman from the company said the reviewers get specific guidance on how to deal with reported graphic images and content.

© Copyright IBTimes 2025. All rights reserved.