Biden's AI Executive Order Set To Create New Standards For AI Safety

The recently signed AI executive order requires new safety assessments and provides equity and civil rights guidance.

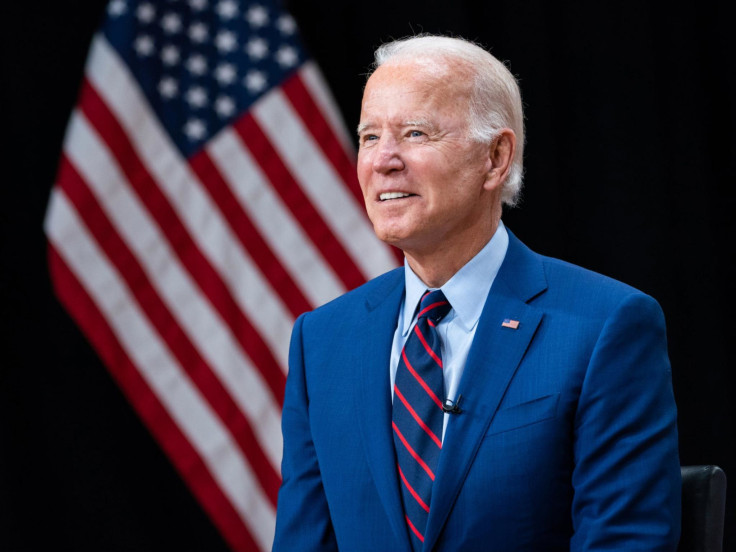

US President Joe Biden has signed a new executive order on artificial intelligence (AI). The order covers research on AI's impact on the labour market, equity and civil rights guidance, and new safety assessments.

Meanwhile, law enforcement agencies are preparing to apply existing laws to abuses of AI, and Congress is working to learn more about the technology and draft new legislation.

US President Joe Biden signed an executive order seeking to reduce the risks that artificial intelligence poses to Americans https://t.co/TgWo4CcaZ2 pic.twitter.com/acoRr2puvq

— Reuters (@Reuters) October 31, 2023

Unlike new legislation, which can take time to pass, the executive order is expected to have an immediate impact. A senior administration official told reporters that the order "has the force of law".

The new executive order has 8 goals

Introduction of new safety and security standards for AI: Some AI companies will have to share safety test results with the federal government. The government will also develop guidance for AI watermarking.

Protecting consumer privacy: This involves providing guidelines to help agencies evaluate privacy techniques used in AI.

Promoting equity and civil rights: This can be achieved by providing guidance to landlords and federal contractors to keep AI algorithms from being used to discriminate.

Protecting consumers overall: The Department of Health and Human Services will create a program to evaluate potentially harmful AI-related healthcare practices. This goal also involves creating resources to help educators use AI tools responsibly.

Supporting workers: The order calls for a report on the potential labour market implications of AI. The federal government could also explore new ways to support workers affected by disruption to the labour market.

Innovation and competition: The government can expand grants for AI research in areas such as climate change, and modernise the criteria that allow highly skilled immigrant workers with AI expertise to stay in the US.

International partners: The government can work with international partners to implement AI standards around the world.

Government use of AI: Lastly, the order calls for developing guidance on federal agencies' use and procurement of AI.

Biden's new AI executive order: What to expect?

In a statement, White House Deputy Chief of Staff Bruce Reed said the order represents "the strongest set of actions any government in the world has ever taken on AI safety, security, and trust".

The order builds on voluntary pledges the White House previously secured from companies including Microsoft, Google and OpenAI. It also comes ahead of an AI safety summit hosted by the UK.

The senior administration official pointed out that 15 major US-based tech companies have signed on to voluntary AI safety commitments, but said that is not enough.

Joe Biden says he'll be introducing an executive order to regulate AI, because he's concerned about it being used to spread fake news and be used for deep fakes.

The Biden Administration considers everything they disagree with fake news. pic.twitter.com/j3Xlk3T87G

— Free Speech America (@FreeSpeechAmer) October 30, 2023

According to the official, the new executive order is a step towards more concrete regulation of AI development.

"The President, several months ago, directed his team to pull every lever, and that's what this order does: bringing the power of the federal government to bear in a wide range of areas to manage AI's risk and harness its benefits," the official said.

In a speech at the White House, Biden said he would meet Senate Majority Leader Chuck Schumer, D-N.Y., and a bipartisan group of lawmakers assembled by Schumer. He said the meeting was intended to "underscore the need for congressional action".

"This executive order represents bold action, but we still need Congress to act," Biden said. The order will require big tech companies developing the most powerful AI systems to share safety test results with the US government before those systems are publicly released.

The order also directs the National Institute of Standards and Technology (NIST) to develop standards for AI "red-teaming", a form of stress-testing that mimics a real-life attacker trying to breach an organisation's cyber defences.

Notably, a recent study claims that AI tools such as ChatGPT can help hackers write malicious code to launch cyber attacks.

The order also says it is imperative to evaluate how agencies collect and use commercially available data, including data purchased from data brokers.

© Copyright IBTimes 2025. All rights reserved.