DeepMind develops first AI capable of learning tasks independently

The first ever artificial intelligence (AI) computer program capable of learning tasks independently has been developed by Google's DeepMind.

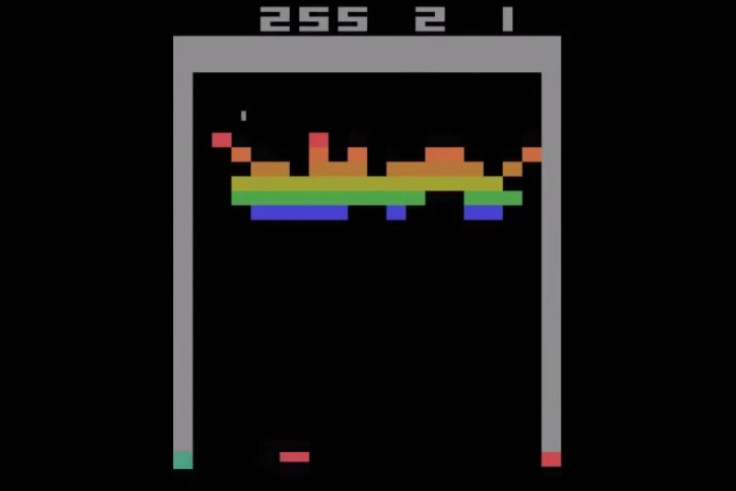

The London-based subsidiary used a general learning algorithm to play a selection of Atari video games without having the games' rules programmed into its software.

Instead, it learned to play each game through reinforcement learning, examining the screen pixel by pixel and assessing which moves would generate the highest score.
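The idea of learning from score alone can be illustrated with a much smaller example. The sketch below is not DeepMind's system, whose internals this article does not describe: it swaps in plain tabular Q-learning on a hypothetical five-cell corridor where the only reward is given for reaching the last cell. The function names, the corridor and every parameter value are assumptions chosen for illustration.

```python
import random


def train_q_table(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                  epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor (illustrative assumption only).

    The agent starts in cell 0 and is never told the rules. It only sees
    which cell it is in and the reward it receives: 1.0 for entering the
    last cell, 0.0 otherwise. Action 0 moves left, action 1 moves right.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    # q[state][action] estimates the long-run score of taking that action.
    q = [[0.0, 0.0] for _ in range(n_states)]

    for _ in range(episodes):
        state = 0
        while state != goal:
            if rng.random() < epsilon:
                # Occasionally try a random move to keep exploring.
                action = rng.randrange(2)
            else:
                # Otherwise pick the best-looking move, breaking ties randomly.
                best = max(q[state])
                action = rng.choice([a for a in (0, 1) if q[state][a] == best])

            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0

            # Nudge the estimate towards reward plus discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state

    return q


def greedy_policy(q):
    """Return the preferred action for every non-goal cell."""
    return [max((0, 1), key=lambda a: row[a]) for row in q[:-1]]


if __name__ == "__main__":
    table = train_q_table()
    print(greedy_policy(table))
```

After training, the preferred action in every cell is to move right, towards the reward, even though the program was only ever shown its position and its score. A system working from raw game screens would need a far richer way to represent what it sees than this small lookup table.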

In 23 out of the 49 different games, DeepMind's algorithm was able to beat a professional human player's top score.

"This is the first significant rung of the ladder towards proving a general learning system can work," said Demis Hassabis, founder of DeepMind.

"It's the first time that anyone has built a single general learning system that can learn directly from experience. The ultimate goal is to build general purpose smart machines - that's many decades away.

"But this is going from pixels to actions, and it can work on a challenging task even humans find difficult. It's a baby step, but an important one."

Google acquired DeepMind last year for a reported £300 million, and it remains unclear what the tech giant plans to do with the advanced AI technology.

Some academics have warned that the technology could be used on Google's vast data sets to serve up targeted ads to users of its products, such as Gmail, Android or its widely used search engine.

"You can use reinforcement learning methods to improve ad quality," said Jürgen Schmidhuber from the Dalle Molle Institute for Artificial Intelligence Research in Manno, Switzerland.

"You learn to place ads that are more likely to be clicked on, which means higher rewards. This is presumably one of their motivations."

Details of the AI system were published in the journal Nature on Wednesday (25 February).

© Copyright IBTimes 2025. All rights reserved.