Godfathers of Technology Believe AI Firms Must Be Held Responsible for Harm They Cause

Experts will gather at Bletchley Park, in Buckinghamshire, next week for a summit on AI safety, after 23 leading figures in the field signed a document claiming it was "utterly reckless" to pursue ever more powerful AI systems.

AI companies must be held responsible for harms caused by their products, a group of senior experts, including two "godfathers" of the technology, have warned.

A document signed by 23 tech experts claimed it was "utterly reckless" to pursue ever more powerful AI systems before understanding how to make them safe.

The intervention was made as international politicians, tech companies, academics and civil society figures prepare to gather at Bletchley Park, in Buckinghamshire, next week for a summit on AI safety.

The government says the meeting aims to consider the risks of AI, especially at the frontier of development, and discuss how they can be mitigated through internationally coordinated action.

Stuart Russell, professor of computer science at the University of California, Berkeley, said: "It's time to get serious about advanced AI systems."

"There are more regulations on sandwich shops than there are on AI companies," he added.

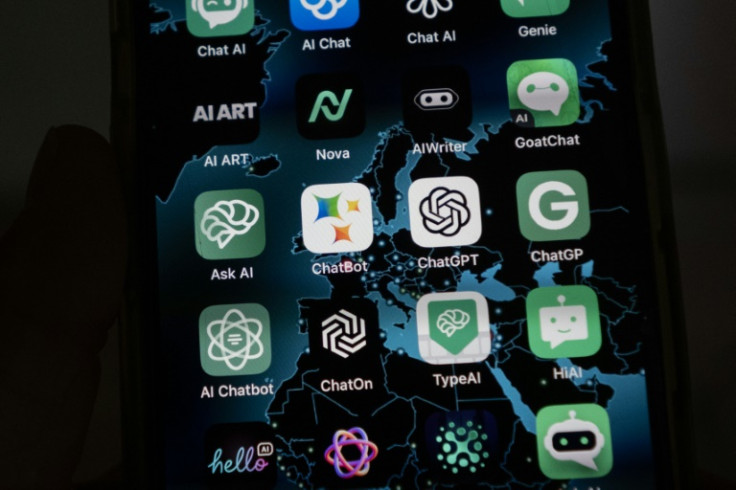

Since ChatGPT, the natural language processing tool launched in November 2022, thrust artificial intelligence into mainstream discourse, the technology has caused significant excitement as well as alarm at its potential to harm the human race.

Fears over the rapid development of artificial intelligence systems even prompted billionaire Tesla and Twitter boss Elon Musk to join hundreds of experts in expressing concern at the advancement of powerful AI systems.

In a letter issued by the Future of Life Institute, the signatories warned that powerful AI systems "should be developed only once we are confident that their effects will be positive and their risks will be manageable."

This latest document, which outlines the dangers of unregulated AI development, also lists a range of measures that governments and companies should take to address AI risks.

These include:

- Governments allocating one-third of their AI research and development funding, and companies one-third of their AI R&D resources, to the safe and ethical use of AI systems.

- Giving independent auditors access to AI laboratories.

- Establishing a licensing system for building cutting-edge models.

- Requiring AI companies to adopt specific safety measures if dangerous capabilities are found in their models.

- Making tech companies liable for foreseeable and preventable harms from their AI systems.

Other co-authors of the document include Geoffrey Hinton and Yoshua Bengio, two of the three "godfathers of AI", who won the 2018 ACM Turing Award (the computer science equivalent of the Nobel Prize) for their work on deep learning.

"Recent state-of-the-art AI models are too powerful, and too significant, to let them develop without democratic oversight," said Bengio.

"[Investment in AI safety] needs to happen fast because AI is progressing much faster than the precautions taken," he said.

There is currently no broad-based regulation focused on AI safety, and the European Union's first set of AI legislation has yet to become law because lawmakers still disagree on several issues.

The UK government has, so far, supported a fairly tolerant approach to the use of AI.

In March, it released a White Paper outlining its stance on the technology.

It said that, rather than enacting legislation, it was preparing to require companies to abide by five "principles" when developing AI. Individual regulators would then be left to develop rules and practices.

However, this position appeared to set the UK at odds with other regulatory regimes, including that of the EU, which set out a more centralised approach, classifying certain types of AI as "high risk".

And at the G7 summit earlier this year, Prime Minister Rishi Sunak signalled that his government might take a more cautious approach to the technology by leading on "guard rails" to limit the dangers of AI.

But Sunak's vision for the world's first AI safety convention appears to be faltering before it's even begun.

One of the executives invited to the summit has warned that it risks achieving very little, accusing powerful tech companies of attempting to "capture" the landmark meeting.

Connor Leahy, the chief executive of the AI safety research company Conjecture, said he believed heads of government were poised to agree on a style of regulation that would allow companies to continue developing "god-like" AI almost unchecked.

Two sources with direct knowledge of the planned discussions say the summit's flagship initiative will be a voluntary global register of large AI models, an essentially toothless measure.

The register's ability to capture the full range of leading global AI projects would depend on the goodwill of large US and Chinese tech companies, which don't generally see eye to eye.

Furthermore, sources close to the negotiations say the US government is annoyed that the UK has invited Chinese officials, as are some members of the UK's ruling Conservative Party.

The attendee list has not been released, but leading companies and investors in the UK's domestic AI sector are angry at not being invited, which cuts them out of discussions about the future of their industry.

With the summit beginning next Wednesday, Sunak will be hoping it does not turn out to be a missed opportunity to ease fears over unregulated AI development.

© Copyright IBTimes 2025. All rights reserved.